Azerbaijani wrestlers advance at Zagreb World Championship

Saudi Crown Prince and Macron discuss the two-state solution

Trump to hold talks with Syrian leader Al-Sharaa

Pashinyan on the Ararat location misconception

Poland scrambles jets after Russian strikes near border

Armenian Economy Minister to visit the US for the TRIPP project

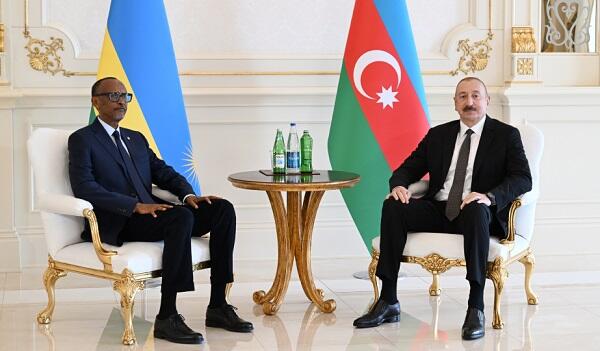

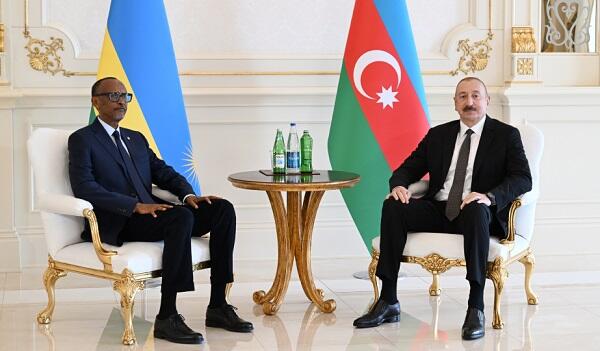

Ilham Aliyev shares a post on a meeting with Kagame - Video

Netanyahu urges US pressure on Egypt

Yushchenko: Ukrainians should march on Moscow

White House: TikTok deal with China to be signed soon

Iranian President: Sanctions won’t stop us

Azerbaijani Chief of Staff begins visit to Serbia - Photo

Boeing nears huge deal before Erdogan-Trump talks

Russia reports updated Ukrainian losses on day 1305

Stormy weather hits Azerbaijan

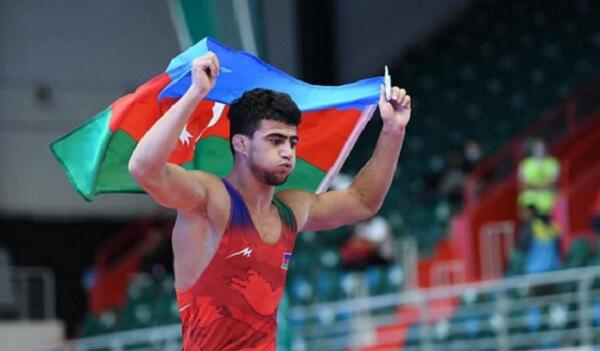

Ilham Zakiyev wins 9th European title

Rwandan President visits Azerbaijan’s ASAN Service Center

F-35 sales to Turkiye could resume if the US lifts sanctions

Turkiye may act if SDF/YPG fails to integrate

YouTube blocks the Venezuelan President's official channel

Next migration caravan reaches Kalbajar

38 emergency vehicles deployed for Baku F1 races - Photo

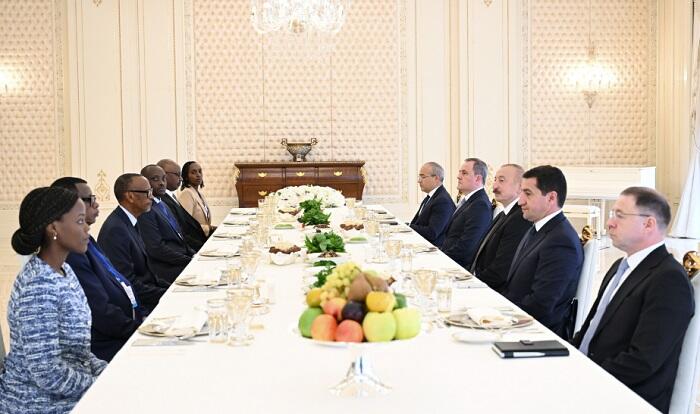

The presidents held an extended meeting over lunch

Formula 1 Azerbaijan Grand Prix contract extended until 2030

Rainy and windy weather is expected tomorrow

Lando Norris leads final F1 practice

Azerbaijan and Rwanda presidents make statements to press - Photo

Ilham Aliyev holds a one-on-one meeting with Paul Kagame